Along with colleagues from the EmployID project, I’ve submitted a paper to the workshop on Learning Analytics for Workplace and Professional Learning (LA for Work) at the Learning Analytics and Knowledge Conference (LAK 2016) in April. Below is the text of the paper. (NB: if you are interested, the organisers are still accepting submissions for the workshop.)

ABSTRACT

In this paper, we examine issues in introducing Learning Analytics (LA) in the workplace. We describe the aims of the European EmployID research project, which seeks to use technology to facilitate identity transformation and continuing professional development in European Public Employment Services. We describe the pedagogic approach adopted by the project based on social learning in practice, and relate this to the concept of Social Learning Analytics. We outline a series of research questions the project is seeking to explore and explain how these research questions are driving the development of tools for collecting social LA data. At the same time as providing research data, these tools have been developed to provide feedback to participants on their workplace learning.

1. LEARNING ANALYTICS AND WORK BASED LEARNING

Learning Analytics (LA) has been defined as “the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs” [1]. It can assist in informing decisions in the education system, promote personalized learning and enable adaptive pedagogies and practices [2].

However, whilst there has been considerable research and development in LA in the formal school and higher education sectors, much less attention has been paid to the potential of LA for understanding and improving learning in the workplace. There are a number of possible reasons for this.

Universities and schools have tended to harvest existing data drawn from Virtual Learning Environments (VLEs) and to analyse that data both to predict individual performance and to undertake interventions which can, for instance, reduce drop-out rates. The use of VLEs in the workplace is limited, and “collecting traces that learners leave behind” [3] may fail to take cognizance of the multiple modes of formal and informal learning in the workplace and the importance of key indicators such as collaboration. A focus on VLEs therefore tends to omit key areas such as collaboration and fails to include all the different modes of learning. Ferguson [4] says that in LA implementation in formal education: “LA is aligned with clear aims and there are agreed proxies for learning.” Critically, much workplace learning is informal, with little agreement on proxies for learning. While Learning Analytics in educational settings very often follows a particular pedagogical design, workplace learning is much more driven by the demands of work tasks or the intrinsic interests of the learner, by self-directed exploration and by social exchange that is tightly connected to the processes and places of work [5]. Learning interactions at the workplace are to a large extent informal and practice based and not embedded into a specific and measurable pedagogical scenario.

Pardo and Siemens [6] point out that “LA is a moral practice and needs to focus on understanding instead of measuring.” In this understanding “learners are central agents and collaborators, learner identity and performance are dynamic variables, learning success and performance is complex and multidimensional, data collection and processing needs to be done with total transparency.” This poses particular issues within the workplace with complex social and work structures, hierarchies and power relations.

Despite these difficulties, workplace learners can potentially benefit from being exposed to their own and others’ learning processes and outcomes, as this potentially allows for better awareness and tracing of learning, sharing experiences, and scaling informal learning practices [5]. LA can, for instance, allow trainers and L&D professionals to assess the usefulness of learning materials, to increase their understanding of the workplace learning environment in order to improve it, and to intervene to advise and assist learners. Perhaps more importantly, it can assist learners in monitoring and understanding their own activities, interactions and participation in individual and collaborative learning processes, and help them in reflecting on their learning.

There have been a number of early attempts to utilise LA in the workplace. Maarten de Laat [7] has developed a system based on Social Network Analysis to show patterns of learning and the impact of informal learning in Communities of Practice for Continuing Professional Development for teachers.

There is a growing interest in the use of MOOCs for professional development and workplace learning. Most (if not all) of the major MOOC platforms have some form of Learning Analytics built in providing both feedback to MOOC designers and to learners about their progress. Given that MOOCs are relatively new and are still rapidly evolving, MOOC developers are keen to use LA as a means of improving MOOC programmes. Research and development approaches into linking Learning Design with Learning Analytics for developing MOOCs undertaken by Conole [8] and Ferguson [9] amongst others have drawn attention to the importance of pedagogy for LA.

Similarly, there are a number of research and development projects around recommender systems and adaptive learning environments. LA is seen as having strong relations to recommender systems [10], adaptive learning environments and intelligent tutoring systems [11], and the goals of these research areas. Apart from the idea of using LA for automated customisation and adaptation, feeding back LA results to learners and teachers to foster reflection on learning can support self-regulated learning [12]. In the workplace sphere LA could be used to support the reflective practice of both trainers and learners: “taking into account aspects like sentiment, affect, or motivation in LA, for example by exploiting novel multimodal approaches may provide a deeper understanding of learning experiences and the possibility to provide educational interventions in emotionally supportive ways.” [13].

One potential barrier to the use of LA in the workplace is limited data. However, although smaller data sets obviously place limitations on statistical processes, MacNeill [14] stresses the importance of fast data, actionable data, relevant data and smart data, rather than big data. LA, she says, should start from research questions that arise from teaching practice, as opposed to the traditional approach of starting analytics based on already collected and available data. Gašević, Dawson and Siemens [15] also draw attention to the importance of information seeking being framed within “robust theoretical models of human behavior” [16]. In the context of workplace learning this implies a focus on individual and collective social practices and on informal learning and facilitation processes rather than formal education. The next section of this paper looks at social learning in Public Employment Services and how this can be linked to an approach to workplace LA.

2. EMPLOYID: ASSISTING IDENTITY TRANSFORMATION THROUGH SOCIAL LEARNING IN EUROPEAN EMPLOYMENT SERVICES

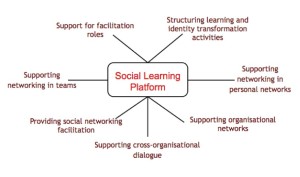

The European EmployID research project aims to support and facilitate the learning process of Public Employment Services (PES) practitioners in their professional identity transformation process. The aims of the project are born out of a recognition that to perform successfully in their job PES practitioners need to acquire a set of new and transversal skills, develop additional competencies, and embed a professional culture of continuous improvement. However, it is unlikely that training programmes will be able to provide sufficient opportunities for all staff in public employment services, particularly in a period of rapid change in the nature and delivery of such services and of intense pressure on public expenditures. Therefore, the EmployID project aims to promote, develop and support the efficient use of technologies to provide advanced coaching, reflection and networking services through social learning. The idea of social learning is that people learn through observing others’ behaviour, attitudes and the outcomes of these behaviours: “Most human behaviour is learned observationally through modelling: from observing others, one forms an idea of how new behaviours are performed, and on later occasions this coded information serves as a guide for action” [17]. Facilitation is seen as playing a key role in structuring learning and identity transformation activities and in supporting networking in personal networks, teams and organisational networks, as well as cross-organisational dialogue.

Social Learning initiatives developed jointly between the EmployID project and PES organisations include the use of MOOCs, access to Labour Market information, the development of a platform to support the emergence of communities of practice and tools to support reflection in practice.

Alongside such a pedagogic approach to social learning based on practice, the project is developing a strategy and tools based on Social Learning Analytics. Ferguson and Buckingham Shum [18] say that Social Learning Analytics (SLA) can be usefully thought of as a subset of learning analytics approaches. SLA focuses on how learners build knowledge together in their cultural and social settings. In the context of online social learning, it takes into account both formal and informal educational environments, including networks and communities. “As groups engage in joint activities, their success is related to a combination of individual knowledge and skills, environment, use of tools, and ability to work together. Understanding learning in these settings requires us to pay attention to group processes of knowledge construction – how sets of people learn together using tools in different settings. The focus must be not only on learners, but also on their tools and contexts.”

Viewing learning analytics from a social perspective highlights types of analytic that can be employed to make sense of learner activity in a social setting. Ferguson and Buckingham Shum go on to introduce five categories of analytic whose foci are driven by the implications of the changes in the ways in which we are using social technology for learning [18]. These include social network analysis, focusing on interpersonal relations in social platforms; discourse analytics, predicated on the use of language as a tool for knowledge negotiation and construction; content analytics, particularly looking at user-generated content; and disposition analytics, based on the view that intrinsic motivation to learn is a defining feature of online social media and lies at the heart of engaged learning and innovation.

The approach to Social Learning Analytics links to the core aims of the EmployID project to support and facilitate the learning process of PES practitioners in their professional identity development by the efficient use of technologies to provide social learning including advanced coaching, reflection, networking and learning support services. The project focuses on technological developments that make facilitation services for professional identity transformation cost-effective and sustainable by empowering individuals and organisations to engage in transformative practices, using a variety of learning and facilitation processes.

3. LEARNING ANALYTICS AND EMPLOYID – WHAT ARE WE TRYING TO FIND OUT?

Clearly there are close links between the development of Learning Analytics and our approach to evaluation within EmployID. In order to design evaluation activities the project has developed a number of overarching research questions around professional development and identity transformation within Public Employment Services. One of these research questions is focused on LA: Which forms of workplace learning analytics can we apply in PES and how do they impact the learner? How can learning analytics contribute to evaluating learning interventions? Others focus on the learning environment and the use of tools for reflection, coaching and creativity, as well as the role of the wider environment in facilitating professional identity transformation. A third focus is how practitioners can better manage their own learning and gain the necessary skills (e.g. self-directed learning skills, career adaptability skills, transversal skills etc.) to support identity transformation processes, as well as facilitating the learning of others, linking individual, community and organizational learning.

These research questions also provide a high level framework for the development of Learning Analytics, embedded within the project activities and tools. Indeed, much of the data collected for evaluation purposes can also inform Learning Analytics and vice versa. However, whilst the main aim of the evaluation work is to measure the impact of the EmployID project and to provide useful formative feedback for the development of the project’s tools and overarching blended learning approach, the Learning Analytics focus is on understanding and optimizing learning and the environments in which it occurs.

4. FROM A THEORETICAL APPROACH TO DEVELOPING TOOLS FOR LA IN PUBLIC EMPLOYMENT SERVICES

Whilst the more practical work is in an initial phase, linked to the roll out of tools and platforms to support learning, a number of tools are under development and will be tested in 2016. Since this work is placed in the particular working environment of public administration, the initial contextual exploration led to a series of design considerations for the suggested LA approaches presented below. Access to fast, actionable, relevant and smart data is, most importantly, regulated by strict data protection and privacy requirements, which play a critical role in any workplace LA. As mentioned above, power relations and hierarchies come into play, and the full transparency to which LA aspires might either be hindered by existing structures or raise expectations that are not covered by existing organisational structures and processes. If efficient learning at the workplace becomes transparent and visible through intelligent LA, what does this mean with regard to career development and promotion? Who has access to the data? How is it linked to existing appraisal systems, or is it perceived as sufficient to use the analytics for individual reflection only? For the following LA tools a trade-off needs to be negotiated, and their practicality in workplace settings can only be assessed when they are fully implemented. Clear rules about who has access to the insight gained from LA have to be defined. The current approach in EmployID is thus to focus on the individual learner as the main recipient of LA.

4.1 Self-assessment questionnaire

The project has developed a self-assessment questionnaire as an instrument to collect data from EmployID interventions in different PES organisations to support reflection on personal development. It contains a core set of questions for cross-case evaluation and LA on a project level as well as intervention-specific questions that can be selected to fit the context. The self-assessment approach should provide evidence for the impact of EmployID interventions whilst addressing the EmployID research questions, e.g. the effectiveness of a learning environment in the specific workplace context. Questions are related to professional identity transformation, including individual, emotional, relational and practical development. For the individual learner the questionnaire aims to foster their self-reflection process. It supports them in observing their ‘distance travelled’ in key aspects of their professional identity development. Whilst using EmployID platforms and tools, participants will be invited to fill in the questionnaire upon registration and then at periodic intervals. Questions and ways of presenting the questionnaire questions are adapted to the respective tool or platform, such as social learning programmes, reflective community, or peer coaching.

The individual results and distance travelled over the different time points will be visualised and presented to individual participants in the form of development curves based on summary categories to stimulate self-reflection on learning. These development curves show the individual learners’ changes in their attitudes and behaviour related to learning and adaptation in the job, the facilitation of colleagues and clients, as well as the personal development related to reflexivity, stress management and emotional awareness.
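As a rough illustration of how ‘distance travelled’ and a development curve could be derived from repeated questionnaire responses, the following minimal sketch may help. It is not the project’s implementation: the function names, the category labels and the idea of a numeric score per summary category are all assumptions made for the example.

```python
def distance_travelled(responses):
    """Change per summary category between the first and the latest response.

    `responses` is a list of (time_point, {category: score}) tuples,
    ordered from registration to the most recent questionnaire.
    The data layout is a hypothetical one chosen for this sketch.
    """
    first_scores = responses[0][1]
    latest_scores = responses[-1][1]
    return {
        category: latest_scores[category] - first_scores[category]
        for category in first_scores
        if category in latest_scores
    }


def development_curve(responses, category):
    """Points (time_point, score) for one category, ready for plotting."""
    return [
        (time_point, scores[category])
        for time_point, scores in responses
        if category in scores
    ]


# Hypothetical scores for one participant at three time points.
example = [
    ("registration", {"reflexivity": 2, "stress_management": 3}),
    ("month_3", {"reflexivity": 3, "stress_management": 3}),
    ("month_6", {"reflexivity": 4, "stress_management": 2}),
]
print(distance_travelled(example))
print(development_curve(example, "reflexivity"))
```

A negative value simply indicates that the self-assessed score in that category is lower at the latest time point than at registration; how such a change should be interpreted is left to the learner’s own reflection.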

4.2 Learning Analytics and Reflective Communities

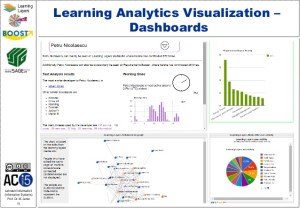

The EmployID project is launching a platform to support the development of a Reflective Community in the Slovenian PES in February 2016. The platform is based on the WordPress Content Management System and the project has developed a number of plugins to support social learning analytics and reflection analytics. The data from these plugins can serve as the basis for a dashboard for learners providing visualisations of different metrics.

4.2.1 Network Maps

This plugin visualizes user interactions in social networks including individual contacts, activities, and topics. The data is visualised through a series of maps and is localised through different offices within the PES. The interface shows how interaction with other users has changed during the last 30 days. This way users can visually “see” how often they interact with others and possibly find other users with whom they wish to interact.

The view can be filtered by different job roles and is designed to help users find topics they may be interested in.
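The kind of data behind such a map can be sketched as a count of a user’s interactions with each contact over the last 30 days. The sketch below is only an assumption about how this might be computed; the event format (date, source user, target user), the function name and the user names are hypothetical and are not taken from the plugin.

```python
from collections import Counter
from datetime import date, timedelta


def interaction_counts(events, user, today, window_days=30):
    """Count how often `user` interacted with each contact in the window.

    `events` is an iterable of (day, source_user, target_user) tuples,
    for example one tuple per comment on another user's post.
    """
    cutoff = today - timedelta(days=window_days)
    counts = Counter()
    for day, source, target in events:
        if day <= cutoff or day > today:
            continue  # outside the last `window_days` days
        if source == user:
            counts[target] += 1
        elif target == user:
            counts[source] += 1
    return dict(counts)


# Hypothetical interaction log.
log = [
    (date(2016, 2, 20), "ana", "bor"),
    (date(2016, 2, 25), "bor", "ana"),
    (date(2016, 1, 10), "ana", "cene"),   # older than 30 days
    (date(2016, 2, 26), "cene", "dana"),  # does not involve "ana"
]
print(interaction_counts(log, "ana", today=date(2016, 2, 29)))
```

Each contact and count could then be drawn as a node and a weighted link in the map; filtering by job role or office would be an additional condition on the events.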

4.2.2 Karma Points

The Karma Points plugin allows users to give each other ‘Karma points’ and ‘reputation points’. It is based both on rankings of posts and of authors. Karma points are temporary and expire after a week but are also refreshed each week. This way users can only donate karma points to a few selected posts in each week. The user who receives a karma point gets the point added to her permanent reputation points.
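The weekly budget and the permanent reputation score described above can be modelled in a few lines. This is a simplified sketch rather than the plugin’s code: the budget of three points per week, the class and the function names are assumptions, and the ranking of posts is left out.

```python
from dataclasses import dataclass

WEEKLY_KARMA = 3  # assumed budget; the text only says "a few" per week


@dataclass
class Member:
    name: str
    karma_left: int = WEEKLY_KARMA  # temporary, reset every week
    reputation: int = 0             # permanent


def give_karma(giver, author):
    """Spend one of the giver's weekly karma points on a post by `author`.

    Returns False when the giver has no karma points left this week.
    """
    if giver.karma_left <= 0:
        return False
    giver.karma_left -= 1
    author.reputation += 1
    return True


def start_new_week(members):
    """Unused karma points expire and every budget is refreshed."""
    for member in members:
        member.karma_left = WEEKLY_KARMA


ana, bor = Member("ana"), Member("bor")
give_karma(ana, bor)
start_new_week([ana, bor])
print(ana.karma_left, bor.reputation)
```

Because unused points are not carried over, the budget limits how many posts a user can endorse in a week, while received points keep accumulating as reputation.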

4.2.3 Reflection Analytics

The Reflection Analytics plugin collects platform usage data and shows it in an actionable way to users. The purpose of this is to show people information in order to let them reflect about their behaviour in the platform and then possibly to give them enough information to show them how they could learn more effectively. The plugin will use a number of different charts, each wrapped in a widget in order to retain customizability.

One chart being considered would visualise the role of the user’s interaction in the current month in terms of how many posts she wrote, how many topics she commented on and how many topics she read compared to the average of the group. This way, users can easily identify whether they are writing a similar number of topics as their colleagues. It shows change over time and provides suggestions for new activities. However, we also recognise that comparisons with group averages can be demotivating for some people.
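The comparison behind such a chart could be computed as in the following sketch. The metric names, the data layout and the decision to include the user in the group average are assumptions made for illustration, not details of the plugin.

```python
from statistics import mean


def compare_to_group(activity, user):
    """Pair each of the user's monthly counts with the group average.

    `activity` maps a user name to a dict of counts, for example
    {"posts": 2, "comments": 5, "reads": 10}. The group average here
    includes the user herself, which is an assumption of this sketch.
    """
    comparison = {}
    for metric, own_value in activity[user].items():
        group_average = mean(counts[metric] for counts in activity.values())
        comparison[metric] = (own_value, group_average)
    return comparison


# Hypothetical counts for the current month.
month = {
    "ana": {"posts": 2, "comments": 5, "reads": 10},
    "bor": {"posts": 4, "comments": 1, "reads": 20},
    "cene": {"posts": 0, "comments": 3, "reads": 30},
}
for metric, (own, average) in compare_to_group(month, "ana").items():
    print(metric, own, average)
```

Given the concern that such comparisons can be demotivating, a widget built on this data might let users switch the group average off and see only their own change over time.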

4.3 Content Coding and Analysis

The analysis of comments and content shared within the EmployID tools can provide data addressing a number of the research questions outlined above.

A first trial of content coding used to analyse inputs into a pilot MOOC held in early 2015 using the FutureLearn platform resulted in rich insights about aspects of identity transformation and learning from and with others. The codes for this analysis were created inductively based on [19] and then analysed according to success factors for identity transformation. Given that identity transformation in PES organisations is a new field of research we expect new categories to evolve over time.

In addition to the inductive coding the EmployID project will apply deductive analysis to investigate the reflection in content of the Reflective Community Platform following a fixed coding scheme for reflection [20].

Similar to the coding approach applied for reflective actions, we are currently working on a new coding scheme for learning facilitation in EmployID. Based on existing models of facilitation (e.g. [21]) and facilitation requirements identified within the PES organisations, a fixed scheme for coding will be developed and applied for the first time in the analysis of content shared in the Reflective Community platform.

An important future aspect of content coding is going one step further and exploring innovative methodological approaches, trialling a machine learning approach based on (semi-)automatic detection of reflection and facilitation in text. This semi-automatic content analysis is a prerequisite for reflecting analysis back to learners as part of learning analytics, as it allows the analysis of large amounts of shared content, in different languages, and not only ex post but continually in real time.

4.4 Dynamic Social Network Analysis

Conceptual work currently being undertaken aims to bring together Social Network Analysis and Content Analysis in an evolving environment in order to analyze the changing nature of, and discontinuities in, knowledge development and usage over time. Such a perspective would not only enable a greater understanding of knowledge development and maturing within communities of practice and other collaborative learning teams, but would also allow further development and improvement of the (online) working and learning environment.

The methodology is based on various Machine Learning approaches including content analysis, classification and clustering [22], and statistical modelling of graphs and networks with a main focus on sequential and temporal non-stationary environments [23].
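To give a feel for what a temporal view of the network involves, the sketch below computes one simple descriptive measure, network density, separately for each time window. It is a deliberately simple stand-in and not the statistical modelling or change detection referred to above; the window labels, the edge format and the function name are hypothetical.

```python
from collections import defaultdict


def density_per_window(edges, members):
    """Share of possible member pairs that interacted in each time window.

    `edges` is an iterable of (window_label, user_a, user_b) tuples.
    Interactions are treated as undirected and counted once per pair.
    Assumes at least two members.
    """
    possible_pairs = len(members) * (len(members) - 1) / 2
    pairs_by_window = defaultdict(set)
    for window, user_a, user_b in edges:
        if user_a != user_b:
            pairs_by_window[window].add(frozenset((user_a, user_b)))
    return {
        window: len(pairs) / possible_pairs
        for window, pairs in sorted(pairs_by_window.items())
    }


# Hypothetical monthly interaction data for a four-person community.
community = ["ana", "bor", "cene", "dana"]
interactions = [
    ("2016-01", "ana", "bor"),
    ("2016-01", "bor", "ana"),
    ("2016-02", "ana", "bor"),
    ("2016-02", "bor", "cene"),
    ("2016-02", "cene", "dana"),
]
print(density_per_window(interactions, community))
```

A marked rise or fall in such a measure from one window to the next is the kind of discontinuity that more formal change detection methods would test for statistically.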

The aim is to illustrate changes of nature and discontinuities at the level of social network connectivity and content of communications in a knowledge maturing process, “based on the assumption that learning is an inherently social and collaborative activity in which individual learning processes are interdependent and dynamically interlinked with each other: the output of one learning process is input to the next. If we have a look at this phenomenon from a distance, we can observe a knowledge flow across different interlinked individual learning processes. Knowledge becomes less contextualized, more explicitly linked, easier to communicate, in short: it matures.” [24]

5. NEXT STEPS

In this paper we have examined current approaches to Learning Analytics and have considered some of the issues in developing approaches to LA for workplace learning, notably that learning interactions at the workplace are to a large extent informal and practice based and not embedded into a specific and measurable pedagogical scenario. Despite that, we foresee considerable benefits through developing Workplace Learning Analytics in terms of better awareness and tracing of learning, sharing experiences, and scaling informal learning practices.

We have outlined a pedagogic approach to learning in European Public Employment Services based on social learning and have outlined a parallel approach to LA based on Social Learning Analytics. We have described a number of different tools for workplace Learning Analytics aiming at providing data to assist answering a series of research questions developed through the EmployID project. At the same time as providing research data, these tools have been developed to provide feedback to participants on their workplace learning.

The tools are at various stages of development. In the next phase of development, during 2016, we will implement and evaluate the use of these tools, whilst continuing to develop our conceptual approach to Workplace Learning Analytics.

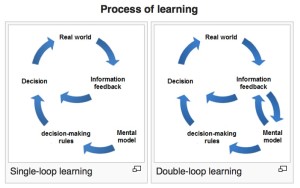

One essential part of this conceptual approach is that supporting the learning of individuals with learning analytics is not just a question, for designers of learning solutions, of how to present dashboards, visualizations and other forms of data representation. The biggest challenge of workplace learning analytics (but also learning analytics in general) is to support learners in making sense of the data analysis:

- What does an indicator or a visualization tell about how to improve learning?

- What are the limitations of such indicators?

- How can we move more towards evidence-based interventions?

And this is not just an individual task; it requires collaborative reflection and learning processes. The knowledge of how to use learning analytics results for improving learning also needs to evolve through a knowledge maturing process. This corresponds to Argyris & Schön’s double loop learning [25]. Otherwise, if learning analytics is perceived as a top-down approach pushed towards the learner, it will suffer from the same problems as performance management. Pre-defined indicators (through their selection, computation, and visualization) implement a certain preconception which is not evaluated on a continuous basis by those involved in the process. Misinterpretations and a misplaced confidence in numbers can disempower learners and lead to an overall rejection of analytics-driven approaches.

ACKNOWLEDGEMENTS

EmployID – “Scalable & cost-effective facilitation of professional identity transformation in public employment services” – is a research project supported by the European Commission under the 7th Framework Program (project no. 619619).

REFERENCES

[1] SoLAR (2011). Open Learning Analytics: An Integrated & Modularized Platform. White Paper. Society for Learning Analytics Research. Retrieved from http://solaresearch.org/OpenLearningAnalytics.pdf

[2] Johnson, L., Adams Becker, S., Estrada, V., Freeman, A. (2014). NMC Horizon Report: 2014 Higher Education Edition. Austin, Texas: The New Media Consortium

[3] Duval E. (2012) Learning Analytics and Educational Data Mining, Erik Duval’s Weblog, 30 January 2012, https://erikduval.wordpress.com/2012/01/30/learning-analytics-and-educational-data-mining/

[4] Ferguson, R. (2012) Learning analytics: drivers, developments and challenges. In: International Journal of Technology Enhanced Learning, 4(5/6), 2012, pp. 304-317.

[5] Ley T. Lindstaedt S., Klamma R. and Wild S. (2015) Learning Analytics for Workplace and Professional Learning, http://learning-layers.eu/laforwork/

[6] Pardo A. and Siemens G. (2014) Ethical and privacy principles for learning analytics in British Journal of Educational Technology Volume 45, Issue 3, pages 438–450, May 2014

[7] de Laat M. & Schreurs (2013) Visualizing Informal Professional Development Networks: Building a Case for Learning Analytics in the Workplace, In American Behavioral Scientist http://abs.sagepub.com/content/early/2013/03/11/0002764213479364.abstract

[8] Conole G. (2014) The implications of open practice, presentation, Slideshare, http://www.slideshare.net/GrainneConole/conole-hea-seminar

[9] Ferguson (2015) Learning Design and Learning Analytics, Presentation, Slideshare http://www.slideshare.net/R3beccaF/learning-design-and-learning-analytics-50180031

[10] Adomavicius, G. and Tuzhilin, A. (2005) Toward the Next Generation of Recommender Systems: A Survey of the State-of-the-Art and Possible Extensions. IEEE Transactions on Knowledge and Data Engineering, 17, 734-749. http://dx.doi.org/10.1109/TKDE.2005.99

[11] Brusilovsky, P. and Peylo, C. (2003) Adaptive and intelligent Web-based educational systems. In P. Brusilovsky and C. Peylo (eds.), International Journal of Artificial Intelligence in Education 13 (2-4), Special Issue on Adaptive and Intelligent Web-based Educational Systems, 159-172.

[12] Zimmerman B. J, (2002) Becoming a self-regulated learner: An overview, in Theory into Practice, Volume: 41 Issue: 2 Pages: 64-70

[13] Bahreini K, Nadolski & Westera W. (2014) Towards multimodal emotion recognition in e-learning environments, Interactive Learning environments, Routledge, http://www.tandfonline.com/doi/abs/10.1080/10494820.2014.908927

[14] MacNeill, S. (2015) The sound of learning analytics, presentation, Slideshare, http://www.slideshare.net/sheilamac/the-sound-of-learning-analytics

[15] Gašević, D., Dawson, S., Siemens, G. (2015) Let’s not forget: Learning Analytics are about learning. TechTrends

[16] Wilson, T. D. (1999). Models in information behaviour research. Journal of Documentation, 55 (3), pp 249-70

[17] Bandura, A. (1977). Social Learning Theory. Englewood Cliffs, NJ: Prentice Hall.

[18] Buckingham Shum, S., & Ferguson, R. (2012). Social Learning Analytics. Educational Technology & Society, 15 (3), 3–26

[19] Mayring, P. (2000). Qualitative Content Analysis. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 1(2). Retrieved from http://nbn-resolving.de/urn:nbn:de:0114-fqs0002204

[20] Prilla M, Nolte A, Blunk O, et al (2015) Analyzing Collaborative Reflection Support: A Content Analysis Approach. In: Proceedings of the European Conference on Computer Supported Cooperative Work (ECSCW 2015).

[21] Hyland, N., Grant, J. M., Craig, A. C., Hudon, M., & Nethery, C. (2012). Exploring Facilitation Stages and Facilitator Actions in an Online/Blended Community of Practice of Elementary Teachers: Reflections on Practice (ROP) Anne Rodrigue Elementary Teachers Federation of Ontario. Copyright© 2012 Shirley Van Nuland and Jim Greenlaw, 71.

[22] Yeung, K. Y. and Ruzzo W.L. (2000). An empirical study on principal component analysis for clustering gene expression data. Technical report, Department of Computer Science and Engineering, University of Washington. http://bio.cs.washington.edu/supplements/kayee/pca.pdf

[23] Mc Culloh, I. and Carley, K. M. (2008). Social Network Change Detection. Institute for Software Research. School of Computer Science. Carnegie Mellon University. Pittsburgh, PA 15213. CMU-CS-08-116. http://www.casos.cs.cmu.edu/publications/papers/CMU-CS-08-116.pdf

[24] R. Maier, A. Schmidt. Characterizing Knowledge Maturing: A Conceptual Process Model for Integrating E-Learning and Knowledge Management. In: Gronau, Norbert (eds.): 4th Conference Professional Knowledge Management – Experiences and Visions (WM ’07), Potsdam, GITO, 2007, pp. 325-334. http://knowledge-maturing.com/concept/knowledge-maturing-phase-model

[25] Argyris, C./ Schön, D. (1978): Organizational Learning: A theory of action perspective. Reading.