Open Accreditation – a model

Can we develop an Open Accreditation system? What would we be looking for? In this post Jenny Hughes looks at criteria for a robust and effective accreditation system.

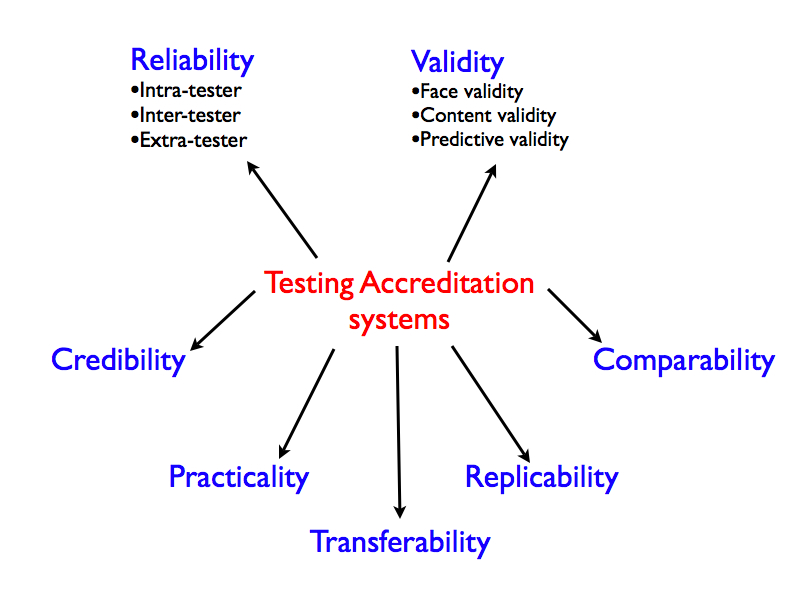

An accreditation system depends on the robustness of the assessment system on which it is based.

Imagine you were in a shop that sold accreditation / assessment systems ‘off-the-peg’. What criteria would you use if you went in to buy one?

Reliability

Reliability is a measure of consistency. A robust assessment system should be reliable; that is, it should be based on an assessment process that yields the same results irrespective of who is conducting it or the environmental conditions under which it is taking place. Intra-tester reliability means that if the same assessor is assessing performance, his or her judgement should be consistent and not influenced by, for example, another learner they have just assessed, or whether they feel unwell or are just in a bad mood! Inter-tester reliability means that if two different assessors were given exactly the same questions, data collection tools, output data and so on, their conclusions should also be the same. Extra-tester reliability means that the assessor’s conclusions should not be influenced by extraneous circumstances that have no bearing on the assessment object.

Validity

Validity is a measure of ‘appropriateness’ or ‘fitness for purpose’. There are three sorts of validity. Face validity implies a match between what is being assessed or tested and how that is being done. For example, if you are assessing how well someone can bake a cake or drive a car, then you would probably want them to actually do it rather than write an essay about it! Content validity means that what you are testing is actually relevant, meaningful and appropriate, and that there is a match between what the learner is setting out to do and what is being assessed. If an assessment system has predictive validity, the results are likely to hold true even under conditions that differ from the test conditions. For example, the performance assessment of airline pilots who are trained on a simulator to cope with emergency situations must be very high on predictive validity.

Replicability

Ideally an assessment should be carried out and documented in a way which is transparent and which allows the assessment to be replicated by others to achieve the same outcomes. Some ‘subjectivist’ approaches to assessment would disagree, however.

Transferability

Although each assessment should be designed around a particular piece of learning, a good assessment system is one that could be adapted for similar situations or extended easily to new activities. That is, if your situation evolves and changes over time in response to need, it would be useful if you didn’t have to rethink your entire assessment system. Transferability is about the shelf-life of the assessment and also about maximising its usefulness.

Credibility

People actually have to believe in your assessment! It needs to be authentic, honest, transparent and ethical. If even one group of stakeholders questions the rigour of the assessment process, doubts the results or challenges the validity of the conclusions, the assessment loses credibility and is not worth doing.

Practicality

This simply means that however sophisticated and technically sound the assessment is, if it takes too much of people’s time, costs too much, is cumbersome to use or produces inappropriate products, then it is not a good assessment system!

Comparability

Although an assessment system should be customised to meet the needs of particular learning events, a good assessment system should also take into account the wider assessment ‘environment’ in which the learning is located. For example, if you are working in an environment where assessment is normally carried out by particular people (e.g. teachers, lecturers) in a particular institution (e.g. school or university) where criterion-referenced assessment is the norm, and you undertake a radically different type of assessment, you may find that your audience is less receptive and your results less acceptable. Similarly, if the learning being assessed is part of a wider system and everyone else is using a different assessment system, your input could be ignored simply because it is too difficult to integrate.

Also, if you are trying to compare performance from one year to the next, or to compare learning outcomes with those of other people, then this needs to be taken into account.

I think I might want to add ‘scale-ability’, a bit like a sub-division of transferability but meaning a system that can cope with ‘big bits’ of learning and also ‘small bits’ of learning. I am also thinking about flexibility as another sub-division, meaning simply the extent to which you can stretch the system without breaking it. If anyone has any more ‘-bilities’, let me know.

Also, I am using the word ‘accreditation’ strictly in the sense of systems for the recognition of learning, NOT in the sense of programme or course accreditation, i.e. individual (…does it need to be individual?) achievement, not an institutional licence to practise. ‘Assessment’ is also used in its broadest sense to include a wide range of strategies, not simply end-testing.

Just as an aside… not many people know this… but I first met Graham when he was one of my students on an initial training course for adult education teachers. On the first day of that course, every year, I used to ask the students what was likely to act as a barrier to their learning. Almost without exception people used to say ‘worrying about whether I pass or fail’. The solution was really easy: the first thing I did on the first morning was to present them with their signed certificates and tell them to stop worrying. It did actually work.

I also told them they could give it back at the end if they thought they didn’t deserve it. (One woman did – and came back the next year. Coral…if you ever read this, that was awesome!)

The moderators had a bit of a problem with it but couldn’t really argue their point unless they had ‘evidence’ that the students were not competent at the end of the course. They are probably still looking for Graham…