Time to return to the Wales Wide Web after something of a hiatus in November and December. And I am looking forward to writing regular posts here again.


New year is a traditional time for reviewing the past year and predicting the future. I have never really indulged in this game but have spent the last two days undertaking a “landscape study” as part of an evaluation contract I am working on. And one section of it is around emerging technologies and foresight. So here is that section. I lay no claim to scientific methodology or indeed to comprehensiveness – this is just my take on what is going on – or not – and what might go on. In truth, I think the main conclusion is that very little is changing in the use of ICT for learning (perhaps more on that tomorrow).

There is at any time a plethora of innovations and emerging developments in technology with the potential to impact on education, both in terms of curriculum and skills demands and in their potential for teaching and learning. At the same time, educational technology has a tendency towards a ‘hype’ cycle, with prominence for particular technologies and approaches rising and fading. Some technologies, such as virtual worlds, fade and disappear; others retreat from prominence only to re-emerge in the future. For that reason, foresight must be considered not just in terms of emerging technologies but in terms of likely future uses in education of technologies, some of which have been around for some time.

Emerging innovations on the horizon at present include the use of Big Data for Learning Analytics in education and the use of AI for Personalised Learning (see below); and MOOCs continue to proliferate.

VLEs and PLEs

There is renewed interest in a move from VLEs to Personal Learning Environments (PLEs), although this seems to be reflected more in functionality for personalising VLEs than in the emergence of new PLE applications. In part, this may be because self-directed learning demands more skills and competence from learners than the managed learning environment provided by VLEs. Personal Learning Networks have tended to be reliant on social networking applications such as Facebook and Twitter. These have been adversely affected by concerns over privacy and fake news, as well as by realisation of the echo effect such applications engender. At the same time, there appears to be a rapid increase in the use of WhatsApp to build personal networks for exchanging information and knowledge. Indeed, one area of interest in foresight studies is the appropriation of commercial and consumer technologies for educational purposes.

Multimedia

Although hardly an emerging technology, the use of multimedia in education is likely to continue to increase, especially with the ease of making video. Podcasting is also growing rapidly and is likely to have increasing impact in the education sector. Yet another relatively mature technology is the provision of digital e-books which, despite declining commercial sales, offer potential savings to educational authorities and can provide enhanced access to those with disabilities.

The use of data for policy and planning

The growing power of ICT-based data applications, and especially big data and AI, is of increasing importance in education.

One use is in education policy and planning, providing near real-time intelligence in a wide range of areas, including future numbers of school-age children, school attendance, attainment, financial and resource provision, and demand and provision for TVET and Higher Education in different subjects, as well as providing insights into outcomes through, for instance, post-school trajectories and employment. A more controversial issue is the use of educational data for comparing school performance, and by parents in choosing schools for their children.

Learning Analytics

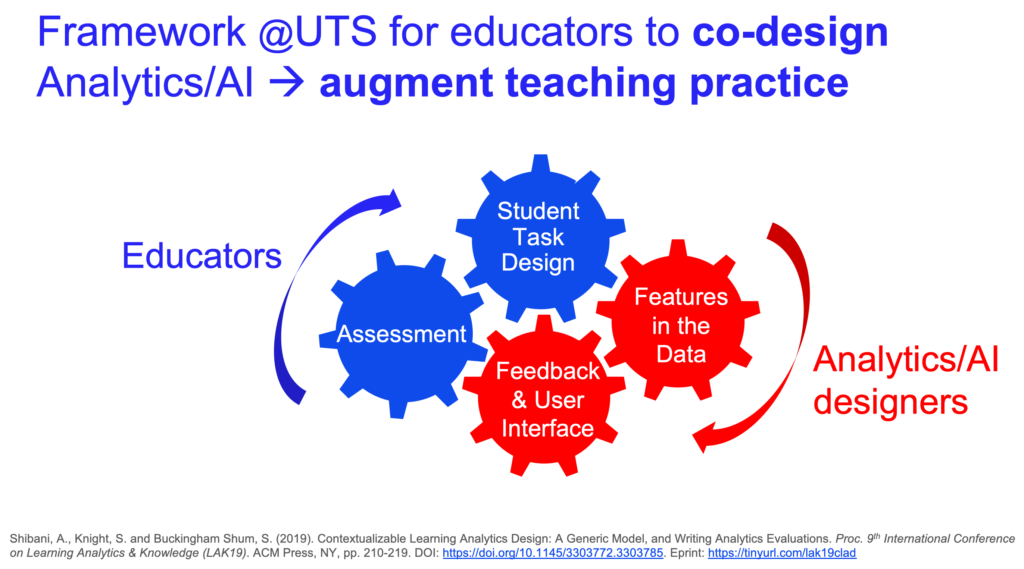

A further rapid growth area is Learning Analytics (LA). LA has been defined as “the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs.” [Reference] It is seen as assisting in informing decisions in education systems, promoting personalized learning and enabling adaptive pedagogies and practices. At least in the initial stages of development and use, universities and schools have tended to harvest existing data drawn from Virtual Learning Environments (VLEs) and to analyse that data both to predict individual performance and to undertake interventions which can, for instance, reduce drop-out rates. Other potential benefits are that LA can allow teachers and trainers to assess the usefulness of learning materials, to increase their understanding of the learning environment in order to improve it, and to intervene to advise and assist learners. Perhaps more importantly, it can assist learners in monitoring and understanding their own activities, interactions and participation in individual and collaborative learning processes, and help them to reflect on their learning.

Pardo and Siemens (YEAR?) point out that “LA is a moral practice and needs to focus on understanding instead of measuring.” In this understanding:

“learners are central agents and collaborators, learner identity and performance are dynamic variables, learning success and performance is complex and multidimensional, data collection and processing needs to be done with total transparency.”

Although initially LA has tended to be based on large data sets already available in universities, school-based LA applications are being developed using teacher-inputted data. This can give teachers an understanding of the progress of individual pupils and of possible reasons for barriers to learning.
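To make the idea of harvesting VLE data to prompt interventions a little more concrete, here is a minimal sketch in Python. It is not taken from any real LA product: the record fields, the thresholds and the function name are all my own assumptions, and it uses simple rules rather than a predictive model. It only shows the general shape of flagging learners a teacher might want to talk to.

```python
from dataclasses import dataclass


@dataclass
class ActivityRecord:
    # Hypothetical fields; a real VLE export will look different.
    learner_id: str
    logins_last_14_days: int
    submitted: int  # assignments handed in so far
    due: int        # assignments due so far


def flag_for_follow_up(records, min_logins=3, min_submission_rate=0.5):
    """Return (learner_id, reasons) pairs for learners below the thresholds.

    The thresholds are illustrative assumptions, not recommended values.
    """
    flagged = []
    for r in records:
        # If nothing is due yet, treat the submission rate as complete.
        rate = r.submitted / r.due if r.due else 1.0
        reasons = []
        if r.logins_last_14_days < min_logins:
            reasons.append("low recent activity")
        if rate < min_submission_rate:
            reasons.append("missed submissions")
        if reasons:
            flagged.append((r.learner_id, reasons))
    return flagged


if __name__ == "__main__":
    sample = [
        ActivityRecord("learner-001", 10, 4, 4),
        ActivityRecord("learner-002", 1, 1, 4),
    ]
    for learner_id, reasons in flag_for_follow_up(sample):
        print(learner_id, "->", ", ".join(reasons))
```

Note that the output is a list of reasons to start a conversation, not a measure of learning. Logins and submissions are only proxies, which is exactly the caution raised in the quotation above.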

Gamification

Educational games have been around for some time. The gamification of educational materials and programmes is still in its infancy and is likely to continue to advance. Another educational technology due for a revival is the development and use of e-Portfolios, as lifelong learning becomes more of a reality and employers seek evidence of job seekers’ current skills and competence.

Bite-sized Learning

A further response to the changing demands in the workplace and the need for new skills and competence is “bite-sized” learning through very short learning modules. A linked development is micro-credentialing, be it through Digital Badges or other forms of accreditation.

Learning Spaces

As ICT is increasingly adopted within education there will be a growing trend for redesigning learning spaces to reflect the different ways in which education is organised and new pedagogic approaches to learning with ICT. This includes the development of “makerspaces”. A makerspace is a collaborative work space inside a school, library or separate public/private facility for making, learning, exploring and sharing. Makerspaces typically provide access to a variety of maker equipment including 3D printers, laser cutters, computer numerical control (CNC) machines, soldering irons and even sewing machines.

Augmented and Virtual Reality

Despite the hype around Augmented Reality (AR) and Virtual Reality (VR), the present impact on education appears limited, although immersive environments are being used for training in TVET and augmented reality applications are being used in some occupational training. In the medium term, mixed reality may become more widely used in education.

Wearables

Similarly, there is some experimentation in the use of wearable devices, for instance in drama and the arts, but widespread use may be some time away.

Blockchain

Blockchain technology was developed to underpin cryptocurrencies and is attracting interest from educational technologists. A blockchain is basically a secure ledger allowing the secure recording of a chain of data transactions. It has been suggested as a solution to the verification and storage of qualifications and credentials in education and even for recording the development and adoption of Open Educational Resources. Despite this, usage in education is presently very limited and there are quite serious technical barriers to its development and wider use.
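For readers who want to see what a "chain of data transactions" means in practice, here is a minimal sketch in Python. It is only a single-machine hash chain, not a real blockchain: there is no network, no consensus mechanism and no digital signing, and the function names and credential records are made up for illustration. It shows only the core idea that each entry stores a hash of the previous one, so altering an earlier record becomes detectable.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, record, prev_hash):
    """Hash the block contents deterministically with SHA-256."""
    payload = json.dumps(
        {"index": index, "record": record, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(chain, record):
    """Add a record, linking it to the hash of the previous block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    block = {
        "index": index,
        "record": record,
        "prev_hash": prev_hash,
        "hash": block_hash(index, record, prev_hash),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Check every link and recompute every hash."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["record"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


if __name__ == "__main__":
    # Hypothetical credential records, purely for illustration.
    ledger = []
    append_record(ledger, {"learner": "learner-001", "credential": "Badge: Data Literacy"})
    append_record(ledger, {"learner": "learner-001", "credential": "Certificate: Web Development"})
    print("valid before tampering:", is_valid(ledger))

    ledger[0]["record"]["credential"] = "Doctorate"  # tamper with an old entry
    print("valid after tampering:", is_valid(ledger))
```

Everything that makes a real blockchain hard (distributing the ledger, agreeing on who may write to it, protecting personal data) is left out here, and those are among the barriers to wider use in education.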

Artificial Intelligence

In research undertaken for this report, a number of interviewees raised the importance of Artificial Intelligence in education (although a number also believed it to be over-hyped).

A recent report from the EU Joint Research Centre (2018) says that:

“in the next years AI will change learning, teaching, and education. The speed of technological change will be very fast, and it will create high pressure to transform educational practices, institutions, and policies.”

It goes on to say AI will have:

“profound impacts on future labour markets, competence requirements, as well as in learning and teaching practices. As educational systems tend to adapt to the requirements of the industrial age, AI could make some functions of education obsolete and emphasize others. It may also enable new ways of teaching and learning.”

However, the report also considers that “How this potential is realized depends on how we understand learning, teaching and education in the emerging knowledge society and how we implement this understanding in practice.” Most importantly, the report says, “the level of meaningful activity—which in socio-cultural theories of learning underpins advanced forms of human intelligence and learning—remains beyond the current state of the AI art.”

Although AI systems are well suited to collecting informal evidence of skills, experience, and competence from open data sources, including social media, learner portfolios, and open badges, this creates both ethical and regulatory challenges. Furthermore, there is a danger that AI could actually replicate bad pedagogic approaches to learning.

The greatest potential of many of these technologies may be for informal and non-formal learning, raising the challenge of how to bring together informal and formal learning and to recognise the learning which occurs outside the classroom.

Sipping a glass of wine on the terrace last night, I thought about writing an article about proxies. I’ve become a bit obsessed about proxies, ever since looking at the way Learning Analytics seems to so often equate learning with achievement in examinations.
